In 2026, Generative AI development cost has moved from an experimental line item to a boardroom priority. What once felt like a research expense is now a measurable driver of efficiency, product innovation, and customer experience. Yet most enterprises still struggle to answer a simple question—what will it actually cost to build and scale a Generative AI solution?

The answer is neither static nor mysterious. Like any engineering initiative, the investment depends on scope, model strategy, and operational depth. Small pilots that fine-tune existing models might start around $20,000–$60,000, while mid-size AI apps integrating retrieval, analytics, and security layers often reach $60,000–$250,000. At the enterprise end, complex multi-domain programs can range from $400,000 to $1 million or more, depending on compliance, data maturity, and integration complexity.

These figures are no longer speculative—they reflect the global shift from proof of concept to production-grade AI. Enterprises across healthcare, finance, logistics, and retail now treat AI as infrastructure. That means budgets must be defensible, repeatable, and directly linked to KPIs such as operational ROI, time-to-decision, or customer satisfaction.

This guide breaks down everything you need to build that clarity. It explains what drives the cost of developing a Generative AI solution, how to map each budget line to business value, and how to keep your AI app development pricing predictable as adoption scales.

By the end, you’ll have a working cost framework—not a guess. You’ll see how to move from a rough estimate to a transparent, data-backed AI budget you can take to your CFO with confidence.

Typical Generative AI Development Cost Ranges in 2026

Why There’s No Single Price Tag for Generative AI

No two AI builds are alike — because no two problems are alike. The Generative AI development cost for an internal assistant trained on your company’s documents will differ sharply from a global e-commerce recommendation engine or a healthcare diagnostic workflow.

The right way to estimate cost isn’t by asking “What’s the average?” but by understanding “What drives my total?” Scope, model selection, data quality, and compliance expectations shape your budget far more than line-by-line code hours ever will.

Still, for planning purposes, directional cost bands help leadership teams forecast investments and prevent “AI sticker shock.” Below are 2026 reference ranges drawn from enterprise projects that Quokka Labs and other leading AI-native teams have delivered.

Indicative Generative AI Development Cost Bands (2026)

| Project Scale | Typical Cost Range (USD) | Description | Best For |
| --- | --- | --- | --- |
| Small / Focused Assistant | $20K – $60K | A compact, single-workflow solution using hosted APIs or lightweight fine-tuning. | Departmental pilots, internal copilots, content tools |
| Mid-Size Custom App | $60K – $250K+ | Retrieval-augmented app with 2–4 integrations, dashboards, and moderate data pipelines. | Customer-facing or cross-functional internal AI tools |
| Enterprise-Grade Program | $400K – $1M+ | Multi-domain architecture, complex data governance, multi-region deployment, and dedicated MLOps. | Regulated sectors, global SaaS platforms, R&D-heavy products |

Note: These ranges are directional, not quotations. The cost of developing a Generative AI solution depends on your actual data condition, security profile, and the complexity of integrations.

Why These Ranges Vary

1. Scope and Use-Case Depth

A chatbot that summarizes PDFs inside one department is cheap to validate. A multi-tenant enterprise assistant that spans departments, supports multilingual data, and integrates with ERP or CRM systems multiplies effort across authentication, API load balancing, and compliance.

2. Model Strategy

Model choice is the biggest multiplier in AI app development pricing.

- API-based (Hosted LLM): Low build cost, higher variable token usage.

- Fine-tuned Model: Mid-range cost, better accuracy for domain-specific tasks.

- Custom-trained Model: High upfront investment but complete control over latency, privacy, and IP ownership.

Each approach trades capital cost for flexibility and long-term run-rate.

3. Compliance and Governance

If your industry falls under HIPAA, GDPR, or FINRA regulations, expect additional spend on audit trails, consent handling, encryption, and model explainability. These non-functional layers can add 10–25 % to the total cost — but they are the price of trust.

4. Scale and Performance Expectations

Proof-of-concept builds usually serve a few internal users. Production deployments handle thousands of concurrent requests, real-time observability, and failover systems. That translates into additional cloud spend and engineering hours.

5. Data Readiness

Clean, structured data saves money. Disorganized datasets inflate labeling and cleaning cycles. Many projects underestimate data engineering costs — often 30–50% of the total.

Quokka Labs Insight: Plan for Range, Not Precision

Enterprises rarely hit their initial AI budget exactly. Instead, successful teams plan for range and elasticity. Treat early estimates as directional maps, not contracts. What matters more than the dollar figure is how you track cost against value. Your first assistant might cost $60K but save 2,000 labor hours per quarter. That’s ROI you can scale, not just spend.

When planning your Generative AI development cost or evaluating Generative AI development services, anchor around business outcomes rather than technical ambition. If a feature doesn’t move a KPI, defer it. If a data source adds risk but not accuracy, exclude it. Every trimmed dependency compounds savings over time.

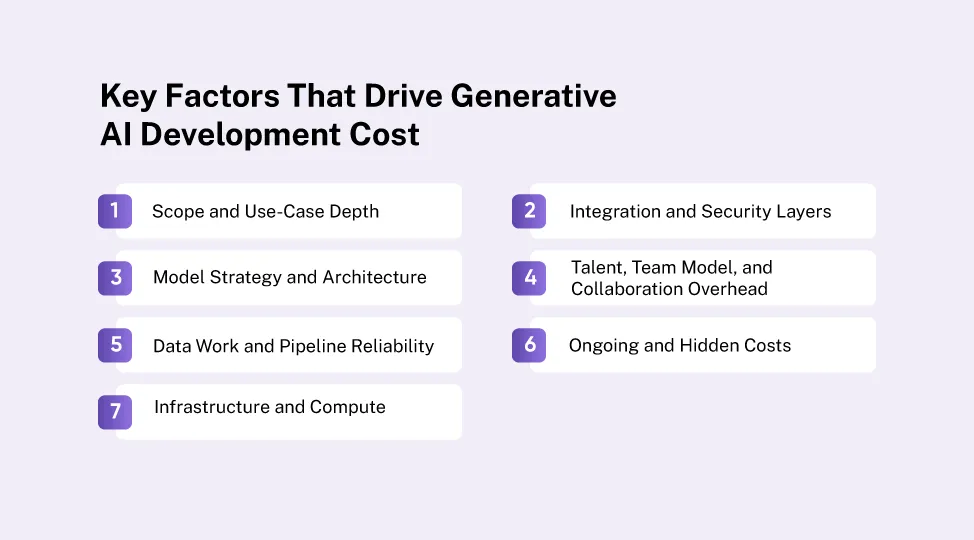

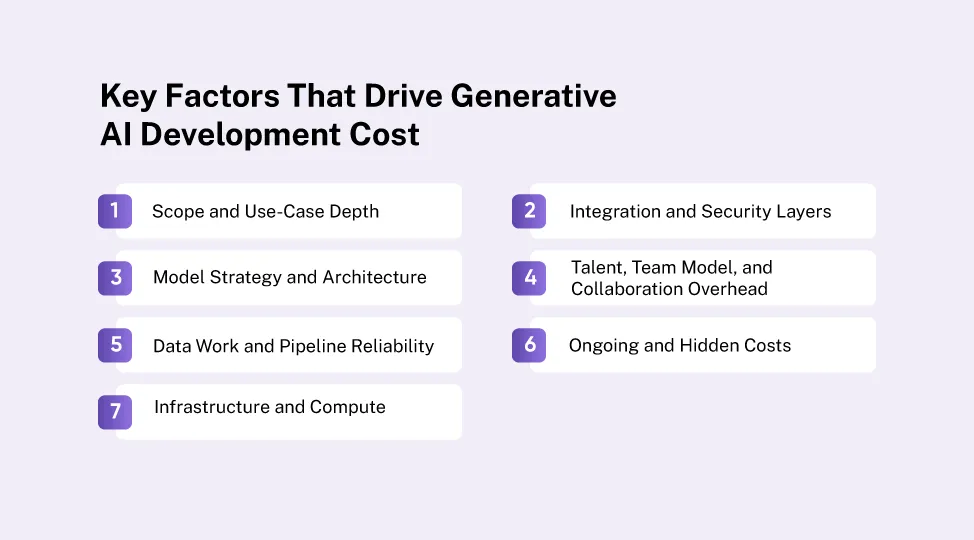

Key Factors That Drive Generative AI Development Cost

Every enterprise team planning an AI build eventually hits the same question: Where does the money actually go?

Understanding these drivers is the fastest way to control the Generative AI development cost. Each factor — from scope to operations — adds or subtracts time, compute, and risk. Knowing them upfront helps you budget smarter and scale with fewer surprises.

1. Scope and Use-Case Depth

Scope defines complexity, and complexity defines cost.

A single-workflow assistant that helps a support team summarize tickets might take 6–8 weeks and stay well within a $40K–$60K range. But once you expand to multi-department logic, multilingual responses, analytics dashboards, and SSO/SCIM integrations, your effort multiplies — and so does the bill.

For regulated industries, factor in additional consent handling, redaction, and audit logging layers. Each of those requirements can add 10–20% to your total spend, but skipping them risks compliance fines that dwarf any development savings.

2. Model Strategy and Architecture

Model choice is the heart of AI app development pricing. The difference between using a hosted API and training your own model is the difference between renting compute and owning infrastructure.

Tip: Define the “Version-1 must-have” before you start. Everything else can go in a later phase once your ROI is proven.

| Model Approach | Description | Cost Profile | When to Use |
| --- | --- | --- | --- |
| API-Only / Managed LLM | Call existing APIs (like GPT-4, Claude, Gemini). Minimal setup, fast to launch. | Low upfront, variable token cost. | MVPs, internal pilots, low compliance risk. |
| Fine-Tuned Model | Customize open or hosted models with your domain data. | Moderate cost, better task alignment. | When accuracy or tone consistency matters. |
| Custom Model Training | Train from scratch using proprietary data and architecture. | High cost, full control. | Enterprise-grade systems, IP ownership, latency control. |

Each jump in control adds cost but reduces dependency. Many enterprises start with hosted APIs, validate ROI, and then shift to hybrid fine-tuning once traffic justifies the investment.

3. Data Work and Pipeline Reliability

Data is the most underestimated cost center in AI projects.

Even with pre-trained models, real-world data is messy, incomplete, and often locked across systems.

Budget realistically for:

- Data sourcing and cleaning

- Labeling and annotation

- PII masking and compliance workflows

- Pipeline reliability and version control

Bad data multiplies rework, evaluation failures, and token waste. In most enterprise builds, data engineering consumes 30–50% of the total cost of developing a Generative AI solution.

Tip: Start with smaller, high-quality datasets and expand gradually. Better data outperforms bigger data almost every time.

4. Infrastructure and Compute

Infrastructure spend is directly tied to token usage, context length, and concurrency.

- Cloud vs On-Prem: Cloud offers elasticity and speed; on-premises gives compliance control.

- Compute class: GPU/TPU selection impacts throughput and inference latency.

- Monitoring: Caching and load balancing lower per-request cost.

Ignoring this layer leads to “runaway bills” — when token consumption scales faster than revenue. A mid-size app serving 10,000 queries/day can see monthly compute costs rise from $2K to $20K without proper quota and caching logic.

Pro move: Cap tokens per session, use smart retrieval to trim context, and track cost per successful output, not per API call.
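As a rough illustration of tracking cost per successful output rather than per API call, here is a minimal sketch. The `CallRecord` fields, the notion of a "successful" call, and the price used in the usage note are illustrative assumptions, not part of any provider's SDK.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    """One model call. Field names are hypothetical, for illustration only."""
    tokens: int       # prompt + completion tokens billed for the call
    succeeded: bool   # True if the output was accepted or used downstream


def cost_per_successful_output(calls, price_per_1k_tokens):
    """Total token spend divided by the number of successful outputs."""
    total_cost = sum(c.tokens for c in calls) / 1000 * price_per_1k_tokens
    successes = sum(1 for c in calls if c.succeeded)
    if successes == 0:
        # All spend was wasted; signal that with infinity instead of dividing by zero.
        return float("inf")
    return total_cost / successes


def cost_per_call(calls, price_per_1k_tokens):
    """The naive metric, shown for comparison."""
    if not calls:
        return 0.0
    total_cost = sum(c.tokens for c in calls) / 1000 * price_per_1k_tokens
    return total_cost / len(calls)
```

With three calls of 2,000, 2,000, and 1,000 tokens where the second one fails, the per-call figure looks cheaper than the per-success figure, because failed calls still consume tokens. That gap is the waste the metric is meant to expose.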

5. Integration and Security Layers

Integrations make AI useful; security makes it viable.

Connecting your AI system with CRMs, ERPs, or databases adds tangible value but also adds authentication, role management, and observability layers.

Each integration can add 5–10% to your overall build cost, depending on complexity. Security and privacy reviews add another 10–15% for enterprises handling sensitive data.

If your AI solution interacts with payments, patient records, or trading data, budget additional time for segregation testing, approval gates, and rollback systems.

6. Talent, Team Model, and Collaboration Overhead

Your team structure influences both velocity and cost efficiency.

- In-house: Maximum control and continuity but higher fixed cost.

- Outsourced: Lower upfront cost and faster delivery but potential knowledge gaps.

- Hybrid (most common): Retain product and data ownership internally, partner with an AI-native firm like Quokka Labs for architecture, model ops, and scalability.

Rates vary widely by region, skill depth, and contract model. Senior ML engineers or data architects typically bill 2–3× more than application developers, but their impact on re-work avoidance pays off exponentially.

7. Ongoing and Hidden Costs

The Generative AI development cost doesn’t stop at launch — it evolves.

Enterprises often overlook post-deployment operations:

- Monitoring and MLOps

- Model retraining and red-teaming

- Token optimization

- Prompt library maintenance

- Feature drift detection

- Continuous evaluation sets

Plan for a recurring run-rate, typically 15–30% of your initial build cost per year. Treat it as operational expenditure (OpEx) rather than a surprise expense.

Breaking Down the Budget — Line-Item View

A realistic AI budget isn’t a single figure; it’s a composition of many interlocking parts.

Each line item represents a real engineering effort — from discovery to deployment — that contributes to the total Generative AI development cost.

Seeing these components clearly helps decision-makers allocate resources intelligently, compare bids fairly, and ensure that every dollar spent aligns with measurable business outcomes.

The Core Budget Layers

At Quokka Labs, we treat AI budgeting as a system, not a spreadsheet.

Each layer builds on the last — strategic clarity first, technical depth next, and operational sustainability last.

Here’s how that translates into cost reality:

| Budget Component | Typical Cost Range (USD) | Purpose | Notes / Optimization Insight |
| --- | --- | --- | --- |
| 1. Discovery & Feasibility | $4K – $15K | Clarify the problem, define KPIs, identify risks. | Invest here to prevent downstream rework. A precise discovery phase saves up to 25% of total project cost. |
| 2. UX/UI & Service Design | $5K – $40K | Design intuitive interfaces, conversation flows, and feedback loops. | Early design of prompts in UI improves retention and reduces hallucination risk. |
| 3. Model Work | Varies (can dominate) | Selection, fine-tuning, evaluation, and safety testing. | Start with hosted APIs; fine-tune only when ROI is proven. |
| 4. Data Engineering & Pipelines | $10K – $80K | Ingestion, transformation, metadata, and privacy compliance. | Clean data saves compute and reduces token waste. |
| 5. App / API Development | $15K – $100K | Backend, retrieval, vector search, auth, and integration logic. | Modular architecture allows future upgrades without rebuild. |
| 6. Infrastructure & Compute | $5K – $50K+ (initial) | Model hosting, caching, GPU allocation, and scaling. | Use dynamic quotas and caching to prevent exponential cost growth. |
| 7. Testing, QA & Security Reviews | $5K – $25K | Functional, load, and penetration testing; threat modeling. | Always protect this line; underfunded QA leads to instability and audit delays. |
| 8. MLOps & Monitoring | $10K – $40K | Logging, drift detection, performance dashboards. | Build lightweight first; automate evaluation as usage scales. |
| 9. Launch & Maintenance | $5K – $25K+ (recurring) | Documentation, support, retraining, model updates. | Plan 15–30% of build cost per year as maintenance OpEx. |

Insight: If a budget line doesn’t clearly support a KPI or mitigate a known risk, defer it. Leaner projects deliver faster feedback and lower cost per iteration.

Example: Cost Distribution for a Mid-Size AI Application

| Category | Approx. % of Total | Strategic Focus |
| --- | --- | --- |
| Discovery & Design | 10–15% | Clarity and alignment |
| Data Work | 25–35% | Foundation for model accuracy |
| Model & Infra | 20–30% | Core intelligence and scalability |
| Integrations & QA | 15–20% | Security and reliability |
| MLOps & Maintenance | 10–15% | Sustainability and evolution |

This ratio ensures the budget isn’t consumed by glamour tasks like model tuning while neglecting essentials such as evaluation and monitoring — the silent killers of AI ROI.
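To see what this split means in dollars, the percentage bands can be applied to a total budget. This is a minimal sketch: the percentages come from the distribution table, while the function names and the $150K total in the usage note are assumptions chosen for illustration.

```python
# Percentage bands from the distribution table, expressed as fractions.
SPLIT = {
    "Discovery & Design": (0.10, 0.15),
    "Data Work": (0.25, 0.35),
    "Model & Infra": (0.20, 0.30),
    "Integrations & QA": (0.15, 0.20),
    "MLOps & Maintenance": (0.10, 0.15),
}


def allocate(total_budget, split=SPLIT):
    """Return {category: (low_usd, high_usd)} for a given total budget."""
    return {
        name: (round(total_budget * low), round(total_budget * high))
        for name, (low, high) in split.items()
    }


def band_totals(split=SPLIT):
    """Sum of the low ends and of the high ends of all bands."""
    low = sum(band[0] for band in split.values())
    high = sum(band[1] for band in split.values())
    return low, high


if __name__ == "__main__":
    for category, (low, high) in allocate(150_000).items():
        print(f"{category:<22} ${low:>7,} to ${high:>7,}")
```

Note that the low ends of the bands sum to 80% and the high ends to 115%, so a real plan has to pick one point inside each band such that the shares total 100%.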

Project Type vs. Directional Build Bands

| Project Type | Scope | Directional Build Band (USD) |
| --- | --- | --- |
| Focused Assistant | API model, light retrieval, single sign-on | ~$20K – $60K |
| Mid-Size App | RAG setup, 2–4 integrations, dashboards, monitoring | ~$60K – $250K+ |
| Enterprise Program | Multi-domain, strict controls, multi-region deployment | ~$400K – $1M+ |

In-House vs. Outsourcing vs. Hybrid Generative AI Development Cost — What’s Smarter for 2026?

Choosing how to build your AI product is as important as deciding what to build.

Your team model — whether in-house, outsourced, or hybrid — directly determines cost, velocity, and long-term flexibility.

By 2026, enterprises are no longer asking if they should invest in AI but how to sustain that investment without locking themselves into fragile architectures or runaway spending.

Let’s break down how each approach affects the Generative AI development cost, knowledge continuity, and scalability.

1. In-House Development

Definition:

Your own data scientists, developers, and architects handle everything — from strategy to deployment.

| Advantages | Trade-Offs |
| --- | --- |
| Full ownership of IP, data, and model logic. | Higher fixed costs (salaries, benefits, infrastructure). |
| Direct control over roadmap and security. | Slower time-to-market if team lacks prior GenAI experience. |
| Strong internal knowledge retention. | Difficult to scale hiring during spikes or new initiatives. |

Cost Range (Typical):

$250K–$1M+ for enterprise programs, depending on headcount and tech stack.

When It Works:

In-house development is ideal for organizations treating AI as a core differentiator, not a feature. Think healthcare providers building HIPAA-compliant diagnostic tools or financial institutions creating proprietary fraud detection models.

Insight: In-house AI success depends on leadership buy-in. Without a dedicated AI program owner and MLOps maturity, costs climb but velocity doesn’t.

2. Outsourcing to AI Specialists

Definition:

You partner with an external AI development company like Quokka Labs that provides ready accelerators, proven frameworks, and technical depth.

| Advantages | Trade-Offs |
| --- | --- |
| Access to specialized AI architects, prompt engineers, and MLOps experts. | IP and data handling require strict governance agreements. |
| Faster delivery cycles using pre-built templates and reusable modules. | Limited internal knowledge retention unless co-development is planned. |
| Predictable cost structure via project-based contracts. | Over-dependence on vendor if ownership transfer isn’t clear. |

Cost Range (Typical):

$60K–$400K+ depending on scope, integrations, and compliance level.

When It Works:

Outsourcing fits when you need to validate an AI idea fast or your internal team is at capacity.

It also suits companies exploring AI app development pricing before investing in permanent teams.

Pro Tip: Choose partners who can deliver transparency in their cost models — token tracking, model usage dashboards, and phased delivery reports should be part of the contract.

3. Hybrid Collaboration Model

Definition:

A balanced model — you retain product, data, and domain ownership internally, while an external partner provides specialized expertise for modeling, infrastructure, and scaling.

| Advantages | Trade-Offs |
| --- | --- |
| Best of both worlds: speed and ownership. | Requires clear coordination and shared tooling for communication. |
| Keeps sensitive data internal while leveraging external expertise. | Slightly higher management overhead than fully outsourced builds. |
| Flexible scaling: add or reduce partner capacity as needs evolve. | Budgeting complexity due to mixed billing models. |

Cost Range (Typical):

$100K–$600K+ depending on duration and partner role.

When It Works:

Hybrid collaboration is the preferred enterprise model for 2026.

It aligns perfectly with agile AI programs where governance, compliance, and rapid iteration coexist.

For example, your in-house data team handles pipelines and governance, while Quokka Labs engineers the retrieval, model integration, and MLOps backbone.

Quokka Labs Perspective: Hybrid collaboration consistently delivers 30–40% faster time-to-market and reduces the total cost of developing a Generative AI solution by eliminating redundant effort.

Decision Framework — Which Model Fits You?

| Criteria | In-House | Outsourced | Hybrid |
| --- | --- | --- | --- |
| Speed to Market | Moderate | Fastest | Fast |
| Cost Predictability | Variable | High | Medium-High |
| Knowledge Retention | Maximum | Low | High |
| Scalability | Slow | Moderate | Flexible |
| Best For | AI-centric enterprises | MVPs, pilots, experiments | Mature organizations scaling validated AI |

Rule of Thumb:

- Start outsourced if speed and validation matter most.

- Move hybrid as adoption and traffic grow.

- Scale in-house once AI becomes mission-critical IP.

Governance, IP, and Security Considerations

Regardless of your model, cost optimization without governance is short-lived.

- Implement a shared risk matrix between vendors and internal stakeholders.

- Establish IP clauses early — model weights, data pipelines, and prompt libraries should have clear ownership paths.

- Conduct periodic audits of compute usage, data access, and model updates.

These measures prevent “AI sprawl,” where multiple disconnected initiatives silently inflate the Generative AI development cost across departments.

How to Estimate Your Own Generative AI Development Cost (Step-by-Step)

Budgeting for Generative AI is not guesswork — it’s systems thinking.

The smartest enterprises treat AI cost planning the same way they treat infrastructure scaling: measurable, modular, and revisable.

Below is a step-by-step method to estimate your Generative AI development cost that you can adapt directly into a spreadsheet or internal planning document.

Each stage links technical effort with a financial outcome so you can defend your numbers in front of finance and leadership teams.

Step 1: Define the Use Case and KPIs

Start with clarity, not ambition.

Write your use case in one sentence:

“Reduce support resolution time by 25% with an AI assistant trained on our knowledge base.”

Then attach quantifiable KPIs: mean response time, ticket deflection rate, or customer satisfaction (CSAT).

When the outcome is measurable, every dollar spent has a performance anchor — turning a speculative AI app development pricing discussion into an investment plan.

Step 2: Choose the Model Approach

Your model decision defines both cost and flexibility. Use this framework:

| Model Strategy | Initial Cost | Operational Cost | Best For |
| --- | --- | --- | --- |
| Hosted API (GPT-4, Gemini, Claude) | Low | High (per-use token fees) | MVPs, pilots, low compliance needs |
| Fine-Tuned Model (Open or Private) | Medium | Medium | Domain-specific accuracy, moderate volume |
| Custom Model Training | High | Low (controlled infra) | Long-term IP ownership, high privacy contexts |

Insight: Many enterprises over-invest early. Start with hosted APIs to benchmark ROI, then transition to fine-tuned or custom models once usage justifies the spend.

Step 3: Quantify Data Work

Data is the largest and least predictable part of the cost of developing a Generative AI solution.

Budget for data preparation as its own mini-project — not a side task.

Estimate cost by asking:

- How many data sources need integration?

- Is labeling or anonymization required?

- Are there regulatory frameworks (HIPAA, GDPR, SOC-2)?

Rough rule:

Data readiness = 30–60% of total project effort.

Poor data quality can double iteration cycles and inflate model retraining cost.

Step 4: Estimate Infrastructure and Compute

Compute cost depends on three multipliers:

- Token volume per request

- Concurrent users

- Latency targets

Forecast monthly spend as daily requests × average tokens per request ÷ 1,000 × model price per 1K tokens × 30 days.

Then add a 20% buffer for caching and retry logic.

Example:

A 10K-request/day app at 2K tokens per exchange with a rate of $0.002 per 1K tokens comes to about $40/day, or roughly $1,200/month in base compute, excluding observability and redundancy.
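The forecast in this step can be sketched as a small function. It assumes the model rate is quoted per 1K tokens (as the formula states), a 30-day month, and the 20% buffer mentioned above. The function and parameter names are illustrative.

```python
def monthly_compute_cost(requests_per_day, tokens_per_request,
                         price_per_1k_tokens, buffer=0.20, days=30):
    """Return (base_monthly_cost, buffered_monthly_cost) in USD.

    buffer is the extra fraction reserved for caching and retry logic.
    """
    daily_tokens = requests_per_day * tokens_per_request
    daily_cost = daily_tokens / 1000 * price_per_1k_tokens
    base = daily_cost * days
    return base, base * (1 + buffer)


if __name__ == "__main__":
    # Inputs from the example: 10K requests/day, 2K tokens per exchange,
    # and an assumed rate of $0.002 per 1K tokens.
    base, buffered = monthly_compute_cost(10_000, 2_000, 0.002)
    print(f"Base: ${base:,.0f}/month, with buffer: ${buffered:,.0f}/month")
```

Keeping the inputs as parameters makes it easy to re-run the forecast when token pricing or traffic changes.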

Pro move: Treat infra spend as a variable line item. Monitor token burn per task, not per session, to tie consumption to user success rates.

Step 5: Map the Team Composition and Rates

Create a clear matrix of roles, hours, and rates.

A balanced team for a mid-size build often includes:

| Role | Hourly Rate (USD) | Typical Effort (Hours) | Cost Range |
| --- | --- | --- | --- |
| Product Manager | $60–$120 | 100–150 | $6K–$18K |
| UX/UI Designer | $50–$100 | 100–200 | $5K–$20K |
| Backend Engineer | $70–$150 | 200–400 | $14K–$60K |
| Data/ML Engineer | $90–$180 | 250–500 | $22K–$90K |
| QA & Security Analyst | $50–$100 | 100–200 | $5K–$20K |
| MLOps / DevOps | $80–$150 | 150–300 | $12K–$45K |

These numbers flex with seniority and region, but the pattern matters more than the price: data and ML engineering usually take the largest share of build hours.
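The role matrix translates directly into a few lines of code. The sketch below uses the rates and hours from the table; the data structure and function names are assumptions made for illustration.

```python
# (role, (low_rate, high_rate) in USD/hour, (low_hours, high_hours))
TEAM = [
    ("Product Manager", (60, 120), (100, 150)),
    ("UX/UI Designer", (50, 100), (100, 200)),
    ("Backend Engineer", (70, 150), (200, 400)),
    ("Data/ML Engineer", (90, 180), (250, 500)),
    ("QA & Security Analyst", (50, 100), (100, 200)),
    ("MLOps / DevOps", (80, 150), (150, 300)),
]


def role_cost_range(rate, hours):
    """Low cost = low rate x low hours; high cost = high rate x high hours."""
    return rate[0] * hours[0], rate[1] * hours[1]


def team_total(team=TEAM):
    """Sum the low and high cost across all roles."""
    ranges = [role_cost_range(rate, hours) for _, rate, hours in team]
    return sum(r[0] for r in ranges), sum(r[1] for r in ranges)


def hours_share(role_name, team=TEAM):
    """Fraction of total hours taken by one role, at the low and high ends."""
    low_total = sum(hours[0] for _, _, hours in team)
    high_total = sum(hours[1] for _, _, hours in team)
    for name, _, hours in team:
        if name == role_name:
            return hours[0] / low_total, hours[1] / high_total
    raise KeyError(role_name)
```

Changing a rate or an hour estimate in `TEAM` updates the totals, which is usually easier to audit than hand-edited spreadsheet cells.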

Step 6: Add Integration, Testing, and Security

Integrations and security are where hidden costs appear.

Budget explicitly for:

- Authentication & Role Management (SSO, SCIM)

- Audit Logging & Traceability

- Penetration Testing & Threat Modeling

Together, these account for 10–20% of total project cost, especially in financial, healthcare, or public-sector deployments.

Tip: Never treat compliance as “later work.” Post-launch retrofits are up to 3× costlier than proactive inclusion during design.

Step 7: Include Contingency and Run-Rate

No AI project stays static — data drifts, models evolve, and usage spikes.

Add:

- 10–20% contingency buffer for unplanned effort.

- 15–30% annual OpEx for monitoring, retraining, and infra upkeep.

This shifts your budget from project mindset to product mindset, ensuring longevity instead of fragile one-off delivery.
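A minimal sketch of how the contingency buffer and annual run-rate stack on top of a build estimate. The percentage ranges come from this step; treating the first-year total as build cost plus contingency plus one full year of OpEx is a simplifying assumption, as is the function name.

```python
def reserves(build_cost, contingency=(0.10, 0.20), annual_opex=(0.15, 0.30)):
    """Return low/high contingency, annual run-rate, and first-year totals (USD)."""
    cont = tuple(build_cost * rate for rate in contingency)
    opex = tuple(build_cost * rate for rate in annual_opex)
    # Assumption: year one carries the build, the contingency, and a full year of OpEx.
    first_year = tuple(build_cost + c + o for c, o in zip(cont, opex))
    return {
        "contingency": cont,
        "annual_opex": opex,
        "first_year_total": first_year,
    }


if __name__ == "__main__":
    # $150K is a hypothetical build estimate used only to show the shape of the output.
    for line, (low, high) in reserves(150_000).items():
        print(f"{line:<18} ${low:,.0f} to ${high:,.0f}")
```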

Step 8: Build Your Cost Estimator Sheet

Combine all line items in a simple structure:

| Category | Low Estimate | High Estimate | Notes |
| --- | --- | --- | --- |
| Discovery & Feasibility | $4K | $15K | Scope validation |
| Data Work | $15K | $80K | Cleaning, labeling, governance |
| Model & Infra | $20K | $120K | Hosting, fine-tuning, caching |
| Development & Integrations | $25K | $100K | APIs, dashboards, auth |
| QA, Security, MLOps | $10K | $50K | Testing, monitoring |
| Total Build Estimate | $74K | $365K+ | Directional for 2026 mid-size apps |

This view helps leadership teams scenario-plan — “What if we scale to 3 integrations?” or “What if we delay fine-tuning until Q3?” — without restarting from scratch.
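The estimator sheet and its what-if scenarios can also be kept as a small script. The line items below are the ones from the table; the `with_override` helper and the scenario figures in the usage note are hypothetical.

```python
# Low and high estimates per category, in USD, taken from the estimator sheet.
SHEET = {
    "Discovery & Feasibility": (4_000, 15_000),
    "Data Work": (15_000, 80_000),
    "Model & Infra": (20_000, 120_000),
    "Development & Integrations": (25_000, 100_000),
    "QA, Security, MLOps": (10_000, 50_000),
}


def totals(sheet):
    """Return (low_total, high_total) across all categories."""
    return (
        sum(low for low, _ in sheet.values()),
        sum(high for _, high in sheet.values()),
    )


def with_override(sheet, category, new_range):
    """Return a copy of the sheet with one category replaced, for scenario planning."""
    if category not in sheet:
        raise KeyError(category)
    updated = dict(sheet)
    updated[category] = new_range
    return updated
```

For example, a "delay fine-tuning" scenario might be modeled as `totals(with_override(SHEET, "Model & Infra", (10_000, 60_000)))`, where the reduced range is an invented figure. The original sheet stays untouched, so scenarios can be compared side by side.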

Step 9: Tie Cost to Business Value

AI spending becomes sustainable only when it’s linked to KPIs.

Measure ROI per capability, not per feature:

- $/hour saved in manual effort

- $/customer retained through personalization

- $/decision automated in workflows

When value outpaces spend, scaling becomes a strategic decision, not a financial risk.
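These ratios are simple to compute once the inputs are agreed. The sketch below reuses the earlier example of a $60K assistant that saves 2,000 labor hours per quarter; the $40 loaded hourly rate is an assumption added purely for illustration, as are the function names.

```python
def cost_per_unit(total_cost, units):
    """Generic $/unit metric: dollars per hour saved, customer retained, or decision automated."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units


def payback_quarters(build_cost, hours_saved_per_quarter, loaded_hourly_rate):
    """Quarters needed for labor savings to cover the build cost."""
    value_per_quarter = hours_saved_per_quarter * loaded_hourly_rate
    if value_per_quarter <= 0:
        raise ValueError("quarterly value must be positive")
    return build_cost / value_per_quarter


if __name__ == "__main__":
    build_cost = 60_000
    hours_per_quarter = 2_000
    assumed_hourly_rate = 40  # hypothetical loaded labor cost in USD

    first_year_hours = hours_per_quarter * 4
    print("Cost per hour saved (year 1):", cost_per_unit(build_cost, first_year_hours))
    print("Payback in quarters:", payback_quarters(build_cost, hours_per_quarter, assumed_hourly_rate))
```

The payback figure is only as good as the hourly rate and the measured hours saved, so both should come from the pilot data rather than from estimates.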

Quokka Labs Practice: Every Generative AI engagement includes a “Value Validation Sprint” — two weeks where predicted ROI metrics are compared against actual pilot outcomes before expansion.

Step 10: Review and Adjust Quarterly

Treat your cost model as a living artifact.

Re-forecast quarterly as model efficiency, token pricing, or adoption rates shift.

This keeps your AI app development pricing predictable and prevents unmonitored drift — the silent killer of AI budgets.

Real-World Benchmarks and Case Examples

Knowing the Generative AI development cost in theory is useful.

Seeing how similar enterprises actually spent — and what they achieved — turns that theory into strategy.

These benchmarks from global Generative AI implementations show the practical patterns behind 2026 AI budgets: smaller pilots validate value, mid-size builds operationalize it, and enterprise programs scale it.

1. Small Pilots ($20K – $60K)

- Profile: Rapid MVPs or internal proof-of-concepts.

- Common Stack: Hosted API (GPT-4, Claude, Gemini) + minimal retrieval layer + basic analytics dashboard.

| Example | Scope | Outcome |
| --- | --- | --- |
| IKEA “GPT-Store Assistant” | Customer-facing design helper built on a hosted model; narrow task scope with defined UX. | Delivered faster shopping recommendations; validated customer intent data for future personalization. |
| Morgan Stanley “AI @ MS Debrief” | Internal meeting-note generator integrated with Salesforce. | Replaced manual summarization for 20K+ advisors, saving ~5 hours per week per team. |

- Cost Profile: $25K–$60K for 6–10 weeks of work. Most of the spend goes into data preparation, prompt iteration, and safe UX testing.

- Insight: The best pilots prove value within one business quarter. They establish the baseline token economics and internal confidence for scaling.

2. Mid-Size Applications ($60K – $250K+)

- Profile: Production-ready internal tools or customer-facing features that integrate retrieval, dashboards, and secure access control.

- Common Stack: Fine-tuned or hybrid model, retrieval-augmented generation (RAG), multi-API integrations, basic MLOps.

| Example | Scope | Outcome |
| --- | --- | --- |
| Duolingo Max | GPT-4-powered “Role Play” and “Explain My Answer” modules inside premium tier. | Increased learner retention and premium conversion; continuous fine-tuning cycle for tone accuracy. |
| Stripe + GPT-4 | AI-assisted docs, support routing, and fraud analysis helpers. | Reduced manual triage by ~30%; improved developer satisfaction metrics. |
| IKEA Content Governance Initiative | AI-driven content generation and policy compliance system. | Scaled global content ops with formal audit workflows; lowered localization cost by ~20%. |

- Cost Profile: $100K–$250K+ across 4–6 months. Data pipeline development and compliance testing dominate cost; token monitoring and observability mature during this stage.

- Quokka Labs Perspective: Mid-size builds mark the transition from experiment to infrastructure. The cost of developing a Generative AI solution here is balanced between customization and governance, both essential for enterprise trust.

3. Enterprise-Grade Programs ($400K – $1M+)

- Profile: Multi-domain AI ecosystems spanning regions, departments, and compliance boundaries.

- Common Stack: Custom fine-tuned or private models, batch + real-time pipelines, evaluation frameworks, and dedicated MLOps teams.

| Example | Scope | Outcome |
| --- | --- | --- |
| Morgan Stanley Wealth-Management AI | Research assistant for financial advisors; firm-wide deployment with evaluation frameworks and data lineage controls. | Improved research turnaround by 35%; achieved full FINRA compliance. |
| Klarna AI Assistant | Conversational service for customer support and marketing automation. | Handled 70% of support chats autonomously; saved multimillion-dollar annual cost. |
| Mayo Clinic × Google Cloud (Vertex AI Builder) | Clinical information retrieval and workflow augmentation. | Accelerated case reviews by 25%; maintained HIPAA-compliant architecture. |

- Cost Profile: $500K–$1M+ with recurring annual OpEx of ~25%. Budgets cover R&D, multi-region deployment, auditability, and staff-on-call support.

- Enterprise Lesson: Large AI programs succeed when governance is funded early. Cutting compliance or evaluation budgets is the fastest way to erode trust and balloon post-launch costs.


4. What the Patterns Reveal

| Stage | Primary Cost Driver | Typical Duration | ROI Window |
| --- | --- | --- | --- |
| Pilot | Data prep, prompt iteration | 1–2 months | 1 quarter |
| Mid-Size | Integrations, observability | 3–6 months | 2 quarters |
| Enterprise | Governance, scaling infra | 6–12 months+ | 1–2 years |

Across all scales, three truths persist:

- Data quality outweighs data volume.

- Governance funding prevents exponential re-engineering cost.

- Token visibility decides profitability.

Tips to Control and Optimize Your Generative AI Development Cost

Even the best AI projects can spiral in cost without discipline.

Compute usage scales silently, retraining cycles expand, and infrastructure grows faster than actual adoption.

Enterprises that master Generative AI development cost optimization do one thing differently — they plan for control from day one.

Below are the proven practices Quokka Labs uses across enterprise deployments to keep budgets lean while maintaining performance and compliance.

1. Start with a Minimum Viable Product (MVP)

Launch with focus, not scope.

Your goal is to validate one measurable outcome — not to ship every feature at once.

For instance, launch a knowledge assistant that handles internal FAQs before attempting multi-lingual customer support.

- Why it works: Early validation reveals real usage patterns and saves 30–40% of wasted feature development.

- How to apply: Use a small RAG setup and hosted API model for the pilot. Expand only after metrics prove ROI.

2. Prefer Managed Endpoints Early

Hosted LLM APIs like OpenAI, Anthropic, or Gemini reduce infrastructure overhead dramatically.

They let your team focus on logic, not GPU provisioning.

- Short-term benefit: Faster time-to-market and lower capital expense.

- Long-term strategy: Migrate to fine-tuned or custom models once usage stabilizes and data privacy justifies ownership.

3. Track Tokens Like Revenue

Tokens are the new compute currency.

Treat them as metered resources — every unnecessary token is a cost leak.

Implement:

- Per-user and per-service token limits

- Caching for frequent queries

- Prompt compression and truncation logic

Why it matters:

A 15% reduction in average token count can lower monthly run costs by 25–30% for high-traffic apps.

Tip: Use dashboards (LangSmith, Weights & Biases, or Quokka’s in-house trackers) to monitor cost per successful task, not per call.
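To make the difference between cost per call and cost per successful task concrete, here is a small sketch. The `Call` record, its fields, and the price used are illustrative assumptions, not the schema of any particular dashboard.

```python
from dataclasses import dataclass


@dataclass
class Call:
    task_id: str     # the user-facing task this call belongs to
    tokens: int      # prompt + completion tokens billed for the call
    succeeded: bool  # whether this call completed the task


def cost_per_successful_task(calls: list[Call], price_per_1k_tokens: float) -> float:
    """Total token spend divided by the number of distinct tasks that succeeded."""
    total_cost = sum(c.tokens for c in calls) / 1000 * price_per_1k_tokens
    successful_tasks = {c.task_id for c in calls if c.succeeded}
    if not successful_tasks:
        raise ValueError("no successful tasks in this window")
    return total_cost / len(successful_tasks)


# Illustrative log: task t1 needed a retry, t3 never succeeded.
calls = [
    Call("t1", 500, False),
    Call("t1", 500, True),
    Call("t2", 1000, True),
    Call("t3", 2000, False),
]
print(cost_per_successful_task(calls, price_per_1k_tokens=0.01))
```

In this made-up log, retries and failed tasks still consume tokens, so the cost per successful task comes out at twice the naive cost per call. That gap is the part a per-call metric hides.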

4. Use Open-Source Components Wisely

Open models and frameworks like Llama 3, Mistral, or LangChain can significantly reduce licensing and API costs.

But use them selectively — not all open tools meet enterprise-grade reliability.

- Use open models for non-sensitive workflows (summarization, routing).

- Keep proprietary or hosted models for regulated functions (financial or healthcare decisions).

This hybrid stack optimizes spend without compromising accuracy or governance.

5. Right-Size Evaluation and Red-Team Cycles

Testing is essential, but over-engineering it early wastes resources.

Start with focused evaluation sets — small but representative — then expand as the system matures.

- Continuous micro-evals catch drift faster than massive, infrequent audits.

- Red-teaming should scale with traffic volume, not with curiosity.

6. Plan MLOps from the First Sprint

MLOps is often treated as a “Phase 2” task — that’s a mistake.

Every production AI system needs logging, monitoring, and retraining mechanisms baked in early.

- Set up data drift detection, token monitoring, and error correlation dashboards.

- Automate evaluation and retraining loops once usage scales.

This early foundation avoids outages and model decay — the most expensive failures to fix post-launch.

7. Cache Intelligently and Optimize Context Windows

Not every request needs full retrieval or long context.

Implement:

- Smart caching for repeated prompts

- Chunk-based retrieval to avoid large context windows

- Dynamic truncation for long sessions

These optimizations typically cut compute load by 20–35% in enterprise workloads while keeping latency consistent.
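The caching and truncation ideas above can be sketched in a few lines. This is a simplified illustration under stated assumptions: `estimate_tokens` is a crude stand-in for a real tokenizer (about one token per four characters), `call_model` is a placeholder for a provider client, and the cache only matches prompts that are identical after whitespace and case normalization.

```python
from functools import lru_cache


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: roughly one token per 4 characters."""
    return max(1, len(text) // 4)


def truncate_history(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined estimate fits within max_tokens."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


def call_model(prompt: str) -> str:
    """Placeholder for a real model call; counts invocations for illustration."""
    call_model.calls += 1
    return f"answer to: {prompt}"


call_model.calls = 0


@lru_cache(maxsize=1024)
def cached_answer(normalized_prompt: str) -> str:
    """Only reaches the model on a cache miss."""
    return call_model(normalized_prompt)


def answer(prompt: str) -> str:
    # Normalizing whitespace and case lets trivially different prompts share an entry.
    return cached_answer(" ".join(prompt.lower().split()))


answer("What is RAG?")
answer("  what is   rag? ")
print(call_model.calls)  # the second request is served from the cache
```

Lowercasing is only safe when prompts are not case-sensitive, so treat the normalization step as a choice to revisit per use case. Matching prompts that are similar but not identical would need an embedding-based cache, which this sketch does not attempt.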

8. Keep a Deprecation Path

Every AI feature needs an end-of-life plan.

Kill what doesn’t move a KPI — quickly.

Archived prompts, unused datasets, and redundant retraining loops silently drain both compute and budget.

Pro Tip: Review unused features quarterly. Decommissioning one non-critical workflow can free 10–15% of annual AI OpEx.

9. Align Cost to Value

Linking spending to metrics is the most reliable optimization framework.

Each budget line — model tuning, pipeline maintenance, dashboard development — must support a measurable business outcome:

- Faster decision cycles

- Higher customer retention

- Reduced manual work

If a feature or dataset doesn’t move these needles, defer it.

10. Institutionalize Cost Governance

Make budget discipline part of culture, not crisis response.

Form a cross-functional AI cost committee — product, finance, and data engineering — that reviews model usage, token reports, and cloud spend monthly.

This proactive governance prevents invisible budget creep and keeps your AI app development pricing transparent across departments.

Generative AI Development Cost Trends – 2026 and Beyond

Generative AI is no longer experimental; it’s operational infrastructure.

By 2026, enterprises have moved from “should we invest?” to “how do we sustain growth without runaway cost?”

Understanding the forces shaping tomorrow’s budgets helps you future-proof today’s plan — keeping your Generative AI development cost predictable as the technology evolves.

1. Compute Economics and Hardware Availability

The good news: GPU efficiency keeps improving.

The catch: global demand for AI compute grows even faster.

While new chips from NVIDIA, AMD, and startups like Cerebras reduce cost per FLOP, large-scale adoption still drives total spend upward.

Trend:

- Unit compute costs may drop by 15–20% annually, but total infrastructure bills can rise 30–50% as AI usage expands across departments.

- Enterprise-grade scheduling and quota systems are becoming non-negotiable to avoid silent cost escalation.
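The two percentages in the trend above imply a usage growth rate, which is worth working out when sizing quotas. The sketch below assumes the bill is simply unit cost times usage and pairs the low ends and the high ends of the two ranges; both are simplifying assumptions, and the function name is hypothetical.

```python
def implied_usage_growth(unit_cost_drop: float, bill_growth: float) -> float:
    """Usage growth g that solves (1 + g) * (1 - unit_cost_drop) = 1 + bill_growth."""
    return (1 + bill_growth) / (1 - unit_cost_drop) - 1


low = implied_usage_growth(unit_cost_drop=0.15, bill_growth=0.30)
high = implied_usage_growth(unit_cost_drop=0.20, bill_growth=0.50)
print(f"implied annual usage growth: {low:.1%} to {high:.1%}")
```

Under those assumptions, usage would have to grow by more than half each year for the bill to rise that much while unit prices fall. That is the growth rate scheduling and quota systems need to be planned around.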

Quokka Labs Insight: Always budget for growth, not static load. Treat compute limits as guardrails — not constraints.

2. Rise of Smaller, Smarter Models

The “bigger is better” era is ending.

Smaller domain-tuned models now match large general models for accuracy on focused tasks while consuming a fraction of the compute.

Examples:

- A fine-tuned Llama 3 model (released at 8B and 70B parameters) can outperform larger hosted APIs on industry-specific tasks.

- Retrieval-augmented frameworks (RAG) are blending smaller models with vector search to optimize both cost and latency.

Impact:

- 40–60% cost savings for high-volume inference workloads.

- Faster response times and lower energy use.

Enterprise Takeaway:

Smaller models + smarter retrieval = same intelligence, half the bill.

3. Tooling That Automates Cost Efficiency

New AI toolchains are embedding financial intelligence into technical workflows.

Developers no longer need to guess where compute goes — dashboards now map token usage to business outcomes.

Emerging Tools:

- Intelligent routing gateways: Automatically direct requests to the cheapest capable model.

- Caching engines: Store frequent embeddings and prompt outputs.

- Vector store optimizers: Merge duplicates and trim redundant context windows.

Result:

Continuous reduction in per-request cost without sacrificing quality.
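A routing gateway of the kind described above reduces, at its core, to picking the cheapest model that clears a capability bar. The sketch below is one possible shape for that rule. The model names, prices, and integer capability tiers are made up for illustration, and real gateways typically estimate the required tier from the request rather than receiving it as an argument.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    price_per_1k_tokens: float  # illustrative price, not a real quote
    capability: int             # hypothetical tier: higher handles harder requests


def route(required_capability: int, options: list[ModelOption]) -> ModelOption:
    """Return the cheapest model whose capability tier meets the requirement."""
    capable = [m for m in options if m.capability >= required_capability]
    if not capable:
        raise ValueError("no model meets the required capability")
    return min(capable, key=lambda m: m.price_per_1k_tokens)


# Placeholder catalogue; names, prices, and tiers are invented for this example.
CATALOGUE = [
    ModelOption("small-open-model", 0.2, capability=1),
    ModelOption("mid-hosted-model", 1.0, capability=2),
    ModelOption("large-hosted-model", 5.0, capability=3),
]

print(route(1, CATALOGUE).name)  # simple request goes to the cheapest option
print(route(3, CATALOGUE).name)  # hard request needs the top tier
```

Keeping the rule this explicit makes routing decisions easy to audit, which matters once routing starts to affect both cost and answer quality.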

Prediction:

By 2027, enterprises that adopt AI-native cost tooling could reduce their overall AI app development pricing by up to 35%.

4. Governance Becomes a Budget Line Item

AI governance has matured from checkbox compliance to an engineering discipline.

Security, explainability, and traceability now shape architecture from day one — and they’re worth budgeting for.

Why it matters:

- Models interacting with PII or critical decisions must include evaluation pipelines, audit logs, and incident response plans.

- Governance typically adds 10–15% to the upfront budget but helps prevent million-dollar remediation after deployment.

Quokka Labs Practice: Every enterprise AI project includes a governance sprint — designing model cards, bias tests, and access audits as part of delivery, not an afterthought.

5. Shift in Talent Economics

The talent premium is evolving.

In early AI cycles, anyone with “machine learning” on their résumé commanded a premium.

By 2026, demand has shifted toward teams with shipped product experience — professionals who know not just how to build models, but how to deploy and maintain them at scale.

Trend:

- Salaries stabilize, but project efficiency improves.

- Hiring costs decline 10–15%, while productivity per engineer rises 20–25%.

What it means for you:

Your cost of developing a Generative AI solution will depend less on headcount and more on process maturity and collaboration efficiency.

6. AI-Native Infrastructure Adoption

Organizations are increasingly moving from retrofitting existing apps to building AI-native systems — platforms designed with context retrieval, evaluation, and cost-tracking built in.

Impact:

- Reduced duplication across departments.

- Unified compute pools instead of fragmented projects.

- Easier observability across model endpoints and budgets.

Enterprise Advantage:

AI-native architecture improves reusability — building one core engine for summarization or recommendations and deploying it across multiple business units, reducing redundant spend.

7. Outcome-Based AI Procurement

By 2027, more AI engagements are expected to shift from time-based billing to outcome-based pricing.

Instead of paying per model call or engineer hour, enterprises will pay for measurable outcomes — accuracy, latency, or business KPIs.

Examples:

- Paying $X per successful resolution in a support AI, not per API hit.

- Paying per approved report in a compliance summarization tool.

This shift ties budget directly to business performance, increasing accountability and long-term ROI visibility.
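When evaluating an outcome-based quote, it helps to know the price per resolution at which it costs the same as usage-based billing. The sketch below works that out for a support assistant. The function names and all input figures are hypothetical, and it assumes every ticket triggers the same average number of API calls.

```python
def usage_based_cost(api_calls: int, price_per_call: float) -> float:
    """What the buyer pays when billed per API call."""
    return api_calls * price_per_call


def outcome_based_cost(resolutions: int, price_per_resolution: float) -> float:
    """What the buyer pays when billed per successful resolution."""
    return resolutions * price_per_resolution


def break_even_price_per_resolution(
    calls_per_ticket: float, price_per_call: float, resolution_rate: float
) -> float:
    """Price per resolution at which both billing models cost the buyer the same."""
    if not 0 < resolution_rate <= 1:
        raise ValueError("resolution_rate must be in (0, 1]")
    return calls_per_ticket * price_per_call / resolution_rate


# Illustrative inputs only: 1,000 tickets, 4 calls each, 80% resolved.
tickets, calls_per_ticket, price_per_call, resolution_rate = 1000, 4, 0.05, 0.8
break_even = break_even_price_per_resolution(calls_per_ticket, price_per_call, resolution_rate)
print(usage_based_cost(tickets * calls_per_ticket, price_per_call))
print(outcome_based_cost(int(tickets * resolution_rate), break_even))
```

A quote below the break-even figure favors the buyer at the assumed resolution rate. Because the break-even price rises as the resolution rate falls, the vendor carries the cost of unresolved tickets, which is the accountability shift this pricing model is meant to create.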

8. Sustainability and Green Compute Awareness

As AI workloads expand, energy efficiency becomes both a cost and brand concern.

Cloud providers now offer carbon-optimized compute pricing — favoring energy-efficient hardware and regions with renewable grids.

Future Outlook:

- Carbon-aware scheduling could cut 10–12% from overall compute costs.

- Expect sustainability metrics to appear in RFPs and budget audits.

Quokka Labs Recommendation: Align sustainability goals with cost goals — both reward efficient architecture and disciplined usage.

Key Takeaways & Final Thoughts

By now, it’s clear that Generative AI development cost isn’t a static figure — it’s a living, evolving framework shaped by your data, goals, and governance maturity.

Enterprises that treat AI as infrastructure, not as an experiment, gain both agility and cost predictability.

Here’s how to translate these insights into action.

1. Start with Cost Bands, Then Price Your Reality

Every AI initiative begins with directional ranges: $20K–$60K for pilots, $60K–$250K for mid-size apps, and $400K–$1M+ for enterprise programs.

But the real number depends on your business model, integration depth, and user volume.

Rule: Plan for the range first, then refine with your actual data, workflows, and compliance needs.

2. Know What Drives the Budget

The biggest cost drivers are not lines of code — they’re scope, model strategy, and data quality.

A clear use case reduces iteration cycles by half; a clean dataset can cut development cost by 30%; a right-sized model saves thousands in compute each month.

Keep these questions front-of-mind:

- Does every feature move a KPI?

- Does every dataset add measurable accuracy?

- Are we tracking token usage per outcome, not per session?

That’s how you turn budgeting into performance management.

3. Build with Guardrails from Day One

Governance, observability, and evaluation pipelines are not optional add-ons — they are long-term cost savers.

A system built with drift detection and access controls today avoids security remediation tomorrow.

At Quokka Labs, governance is part of architecture, not compliance paperwork.

Each deployment includes audit logging, ethical AI checks, and safety benchmarks to ensure your model grows responsibly with your business.

4. Adopt a Phased Delivery Mindset

AI development rewards iteration.

Launch, learn, and scale in measured increments — the MVP → Expansion → Optimization cycle ensures continuous ROI validation and avoids over-engineering.

- MVP: Validate one measurable outcome.

- Expansion: Integrate, monitor, and evaluate.

- Optimization: Automate retraining and scale governance.

This approach transforms what used to be a cost center into a repeatable investment cycle.

5. Align Cost with Business Value

AI maturity is not about owning the biggest model — it’s about proving business impact per dollar.

For every budget decision, ask: What metric will this improve?

Whether it’s resolution time, fraud detection accuracy, or operational throughput, value-anchored budgeting ensures technology spend always serves business growth.

6. Partner with Experts Who Think in Systems, Not Features

Most AI overruns happen when teams chase novelty over structure.

Partnering with an AI-native development company like Quokka Labs gives you both technical speed and cost transparency.

Our teams combine:

- Proven architecture accelerators

- Token-usage dashboards for real-time cost tracking

- Secure MLOps pipelines built for scale

We help enterprises build AI products that perform — efficiently, ethically, and measurably.

Your Generative AI Cost Blueprint

A tailored roadmap for your next AI initiative — built to balance innovation, scalability, and governance.

Get a transparent cost model, line-item breakdown, and risk-adjusted build plan specific to your use case.
