Artificial intelligence has become a core driver of business operations, shaping decisions, automating processes, and influencing customer experiences across industries. With this expansion comes a parallel rise in risk. Systems are vulnerable to adversarial attacks, data breaches, and manipulation, all of which can disrupt operations and expose sensitive information. For this reason, AI security has become a critical requirement, protecting both infrastructure and the data that powers it.

Yet protection alone is not enough. Securing a system does not address questions of fairness, accountability, or lawful use. To manage these issues, organizations need structured oversight. AI governance is the policy framework that defines how intelligent systems should be designed, monitored, and held accountable. It sets responsibilities, establishes ethical standards, and ensures compliance with evolving regulations.

When combined, these two elements create the foundation of compliance. Security prevents technical failures, while governance prevents legal and ethical ones. Companies are now expected to prove that they can manage both together, balancing innovation with trust and regulatory responsibility.

In this guide, we will explore the principles, frameworks, and best practices that help enterprises stay compliant with AI security and AI governance.

Understanding AI Security

AI security focuses on protection. It defends data, models, and infrastructure from breaches, manipulation, and misuse through access controls, encryption, and continuous monitoring. These measures keep systems reliable and reduce the chance of disruption.

Understanding AI Governance

AI governance establishes direction and accountability. It defines responsibilities, sets ethical boundaries, and ensures transparency in how intelligent systems are designed and applied. Security acts as defense, while governance provides guidance to keep operations lawful and responsible.

Organizations today are expected to show that both elements are in place. Standards such as GDPR, ISO/IEC 42001, and the EU AI Act serve as reference points. At the same time, common issues like hidden bias, unapproved tool usage, and limited expertise make compliance difficult to maintain.

Ethical and Operational Pillars of AI Governance in Businesses

Responsible governance rests on a set of guiding principles that connect ethics with day-to-day operations. These pillars help organizations design, deploy, and monitor intelligent systems with accountability.

- Fairness: Systems must deliver outcomes without favoring or discriminating against groups. Fairness requires constant testing and updates to remove bias from models.

- Transparency: Enterprises should explain how automated decisions are made. Clear documentation and accessible reporting increase user trust.

- Accountability: Every decision must have a responsible owner. Assigning clear roles ensures that failures or errors do not remain unaddressed.

- Reliability and Safety: Platforms should operate as intended across conditions. This includes resilience against failures, cyberattacks, or data quality issues.

- Privacy and Security: Protecting sensitive information is essential. Strong controls such as encryption and anonymization maintain compliance with regulations like GDPR or HIPAA.

- Adaptability: Governance frameworks must evolve as technology and laws change. Updating processes ensures that organizations stay compliant over time.

By following these pillars, companies integrate ethical expectations with operational standards. This balance creates a foundation for AI governance best practices, helping companies reduce risk, meet regulations, and build trust.
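To make the fairness pillar concrete, the sketch below computes the approval rate for each group and the ratio between the lowest and highest rate. The record layout, the group labels, and the 0.8 cutoff are assumptions chosen for illustration (the cutoff echoes the commonly cited "four-fifths" rule of thumb); none of them comes from the regulations named in this guide.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True or False. This layout is an assumption for the sketch.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold, set per internal policy
```

A single ratio like this is only a starting signal. A real fairness review would look at several metrics and at the context of the decision.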

Who Oversees Responsible AI Governance in Businesses

Effective governance depends on leadership at every level. No single executive can manage it alone; instead, multiple roles share responsibility for keeping intelligent systems safe, fair, and compliant.

- CEO and Senior Leadership: They set the culture and signal that accountability is a priority. By prioritizing responsible governance, they create an environment where employees know that ethical use of technology is non-negotiable.

- Legal and Compliance Teams: These groups interpret laws and translate them into policies. They ensure systems align with regulations, reduce exposure to legal risk, and close gaps before deployment.

- Audit and Risk Functions: Independent teams validate data quality, test system integrity, and confirm that platforms work as intended without creating errors. An IBM Institute for Business Value study notes that nearly 80% of enterprises already dedicate a part of their risk function to monitoring AI and generative AI.

- Chief Financial Officer (CFO): Beyond numbers, the finance function measures the cost of governance, tracks the return on AI initiatives, and anticipates financial risks tied to compliance failures.

Governance works only when leaders act together. Executives must sponsor training, enforce policies, and encourage open communication so that responsible use becomes part of daily practice.

Why Businesses are Implementing AI Security and AI Governance

Organizations are not adopting AI security and governance just to check a compliance box. They are doing it because the stakes—financial, reputational, and regulatory—are higher than ever. A few core drivers stand out:

Global rules, such as the EU AI Act and GDPR, as well as data protection laws in the APAC region, demand strict safeguards. Non-compliance can lead to fines, bans, or loss of licenses.

- Customer Trust and Brand Reputation

Users want assurance that their data is safe and that systems make fair decisions. Strong governance signals accountability and builds trust, which directly impacts loyalty.

AI models can be manipulated, biased, or misused. Security controls reduce exposure to cyberattacks, while governance ensures decisions can be explained and defended.

Documented processes and governance frameworks reduce confusion between teams. Clear policies speed up deployment and avoid costly delays.

Companies that adopt security and governance early are better prepared for audits, certifications, and investor due diligence. This maturity often becomes a differentiator in the market.

AI Security and Governance Frameworks to Know

Enterprises that use advanced systems must align with recognized standards for both protection and oversight. Some frameworks emphasize AI security, while others focus on defining AI governance and responsible use of AI. Together, they provide the foundation for compliance.

AI Security Frameworks

NIST AI Risk Management Framework (RMF): Guides organizations in identifying and mitigating risks associated with artificial intelligence systems.

ISO/IEC 42001: An international standard for AI management systems that covers risk management, resilience, and technical safeguards.

GDPR (Security Articles): Requires strong data protection, encryption, and breach reporting.

HIPAA: Sets rules for safeguarding patient records in U.S. healthcare when digital tools are used.

PCI-DSS: Ensures cardholder data remains secure when intelligent platforms support payments or fraud detection.

These frameworks create the baseline for a practical AI governance framework, linking defense with structured rules.

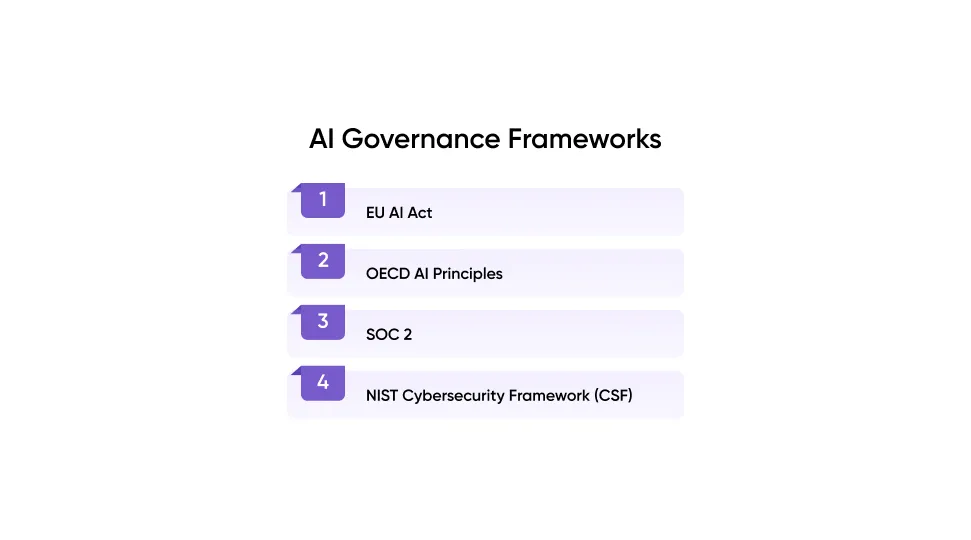

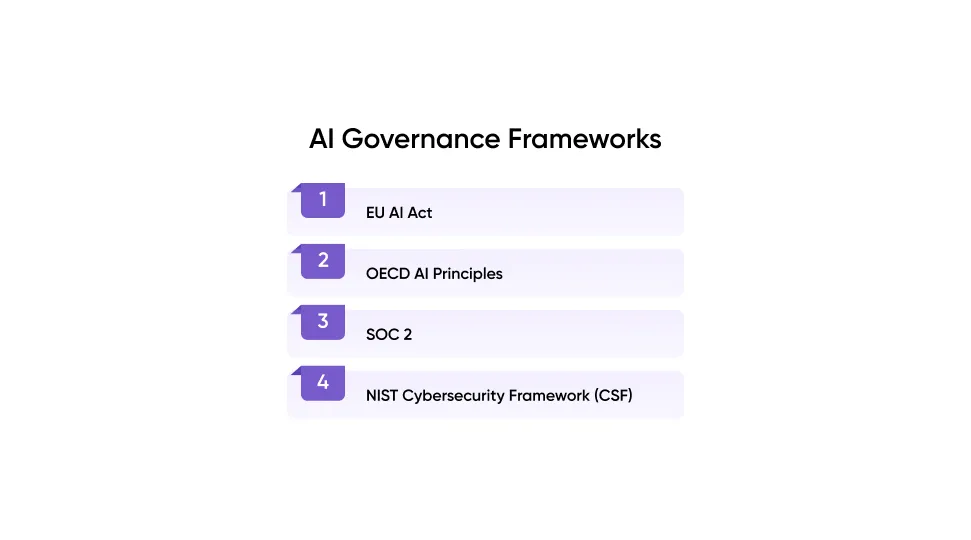

AI Governance Frameworks

EU AI Act: Classifies systems by risk level and sets obligations for high-risk applications such as healthcare and finance.

OECD AI Principles: Provide international guidance on fairness, accountability, and transparency.

SOC 2: Offers audit standards for service providers handling sensitive data.

NIST Cybersecurity Framework (CSF): Broader than artificial intelligence alone, but often applied to align system use with enterprise risk management.

Together, these frameworks provide the foundation for AI regulatory compliance, ensuring security and governance work side by side.

How to Build Your AI Governance Foundation

A strong AI governance framework needs structure and shared accountability. Policies alone are not enough; organizations must put transparent processes and responsibilities in place to connect governance with daily practice.

Form an AI Governance Committee

Every enterprise benefits from a dedicated committee that oversees the responsible use of intelligent systems. This group defines priorities, approves policies, and reviews risks before projects go live.

For example, many banks now run AI review boards that monitor fraud detection tools to check both accuracy and fairness.

Assigning Stakeholder Roles and Responsibilities

Governance only works when everyone knows their role.

1. Executive Leadership

CEOs and senior leaders set the tone for responsible use. They provide direction, sponsor initiatives, and ensure that accountability becomes an integral part of the company culture.

2. Legal and Compliance Teams

These teams interpret regulations and apply them to policies. They assess legal risks, ensure AI compliance, and confirm that systems operate within existing laws.

3. Security and IT Teams

Security leaders safeguard infrastructure and data. They implement encryption, monitoring, and other technical measures that form the base of AI security.

4. Data Science and Product Teams

Model developers and product managers make sure that algorithms reflect fairness, transparency, and usability. They connect technical design with governance requirements.

5. Audit and Risk Teams

Independent risk units validate system integrity. They perform audits, monitor key controls, and verify that outcomes align with intended objectives.

Developing Core Policies for AI Security, Data, and Ethics

Policies serve as the rulebook for responsible use. They should explain how teams collect, process, and store data, define minimum security requirements, and outline ethical expectations such as avoiding bias or ensuring explainability. Well-written policies reduce ambiguity and keep projects aligned with both AI security needs and regulatory expectations.

Designing Governance Framework Processes and Decision Flows

Strong governance connects policies to real workflows. Organizations should map decision flows from design to deployment, adding checkpoints where risk reviews and approvals take place. This makes governance an integral part of the development lifecycle, rather than an afterthought. A common practice is requiring independent validation before launching any high-risk application.
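One way to picture a decision flow with checkpoints is a small gate check that blocks deployment until every required review is recorded. This is a minimal sketch: the gate names, the two risk levels, and the `Project` structure are hypothetical and would be replaced by whatever your own policy defines.

```python
from dataclasses import dataclass, field

# Hypothetical checkpoint names; replace with the gates your policy defines.
REQUIRED_GATES = {
    "low": ["security_review"],
    "high": ["security_review", "bias_test", "independent_validation"],
}


@dataclass
class Project:
    name: str
    risk_level: str  # "low" or "high" in this sketch
    approvals: set = field(default_factory=set)


def missing_gates(project):
    """List the checkpoints a project still has to pass, in policy order."""
    required = REQUIRED_GATES[project.risk_level]
    return [gate for gate in required if gate not in project.approvals]


def can_deploy(project):
    """A project may launch only when no required gate is outstanding."""
    return not missing_gates(project)


scoring = Project("credit-scoring", "high", {"security_review", "bias_test"})
blocked_on = missing_gates(scoring)  # independent validation is still pending
scoring.approvals.add("independent_validation")
ready = can_deploy(scoring)
```

Keeping the gate list in data rather than in code makes it easier to tighten the rules for high-risk applications without touching the deployment logic.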

Implementing Communication and Training Programs

Rules matter only when people understand them. Training sessions and awareness programs help employees learn how governance applies to their role. Clear communication channels also encourage staff to raise concerns early, reducing the chance of compliance failures. Over time, this builds a culture where AI compliance becomes a shared responsibility.

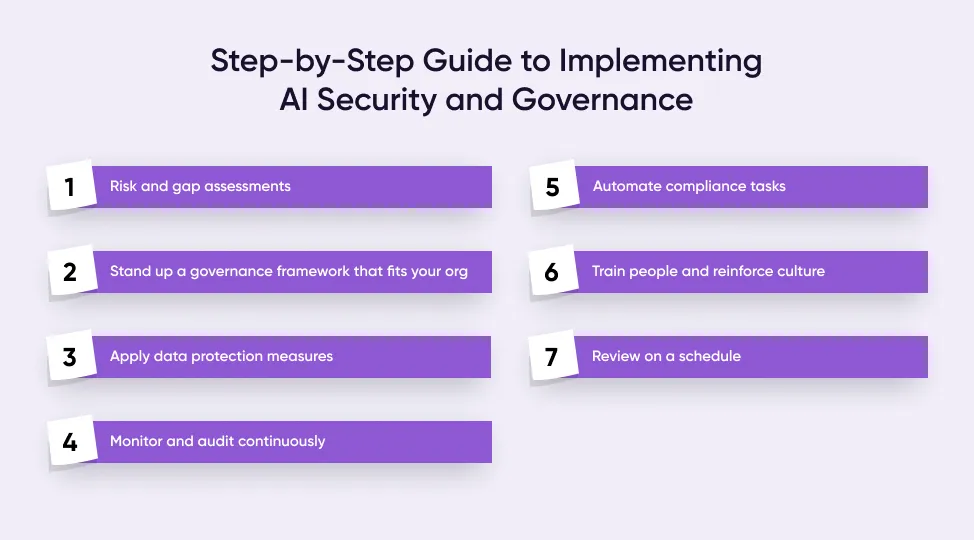

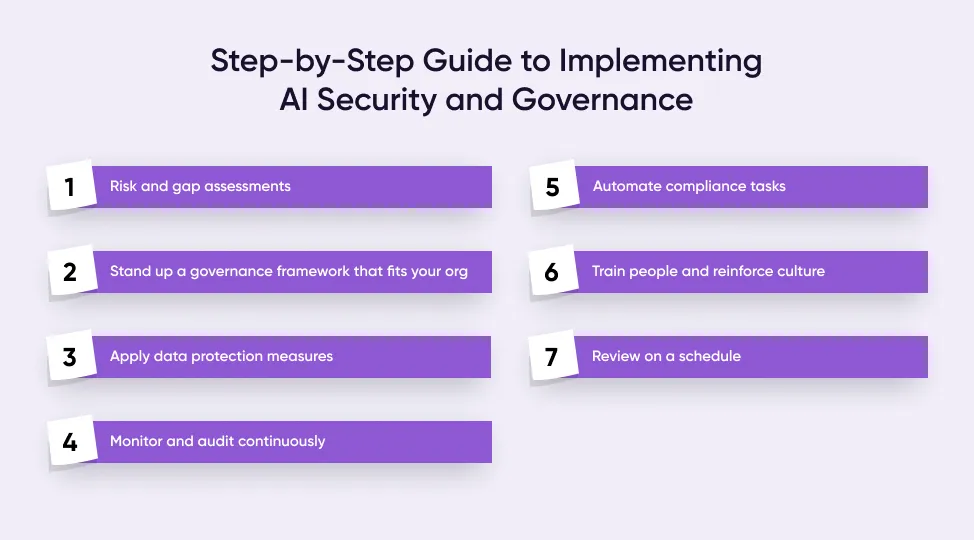

Step-by-Step Guide to Implementing AI Security and Governance

Turn the foundation into a daily practice with a clear rollout. Move through these steps in order, and keep a simple record of ownership, dates, and evidence for audits.

Risk and gap assessments. Inventory models, data flows, and third-party tools. Identify misuse scenarios, privacy exposures, and failure modes, then rank the risks and select controls that minimize their real impact. Use risk frameworks to structure this work.

Stand up a governance framework that fits your org. Define decision rights, approval gates, and documentation (model cards, DPIAs, testing summaries). Align your approach with recognized guidance so projects follow the same path from design to deployment.

Apply data protection measures. Enforce access controls, encryption at rest and in transit, and masking/anonymization where you can. Pair this with repeatable data-quality checks and secure file movement for sensitive pipelines.

Monitor and audit continuously. Track model performance, keep logs, enable alerts for abnormal behavior, and maintain audit trails to demonstrate what ran, when, and why. Dashboards and health scores help teams spot issues fast.

Automate compliance tasks. Use policy templates, control libraries, and reporting tools to reduce manual work. Standardize your security policy set and link evidence (tests, scans, reviews) to each control to prevent audits from stalling.

Train people and reinforce culture. Brief executives on accountability, teach builders how to test fairness and robustness, and educate staff about safe tool use. Regular refreshers cut shadow-tool risk and improve outcomes.

Review on a schedule. When regulations evolve or a system changes, re-assess risks, update policies, and re-approve. Make reviews a regular habit, not a one-time task. This approach ensures a smooth AI implementation and maintains compliance over time.

This sequence translates policy into action, supports AI governance in real-world projects, and enhances AI security where it matters most—production systems and real-world users.
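The first step, ranking risks after an inventory, can be sketched as a simple risk register. The 1-to-5 scales, the likelihood-times-impact score, and the example entries are assumptions for illustration; a formal framework may prescribe a different scoring method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    asset: str
    scenario: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (minor) to 5 (severe), assumed scale

    @property
    def score(self):
        return self.likelihood * self.impact


def rank_risks(risks):
    """Sort risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical inventory entries.
register = [
    Risk("support chatbot", "prompt injection leaks customer data", 4, 4),
    Risk("churn model", "training data drift", 3, 2),
    Risk("third-party OCR tool", "unapproved vendor processes invoices", 2, 5),
]
ranked = rank_risks(register)
top = ranked[0]
```

Recording an owner, a review date, and a link to evidence next to each entry would turn the same structure into the audit record described above.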

How Organizations are Deploying AI Governance and AI Security

Enterprises across various industries are moving beyond policies on paper and have started integrating security and governance into their workflows. Deployment usually happens through a mix of structured processes, oversight bodies, and technology tools.

- Dedicated Governance Committees

Many organizations have created cross-functional boards that review high-risk AI projects. These boards approve deployment only after systems pass compliance and fairness checks.

- Embedded Risk Assessments

Security and compliance checks are now integrated into the model development lifecycle. Before release, teams test models for bias, resilience, and compliance with regional rules.

Companies deploy encryption, access controls, and monitoring systems to protect sensitive data. Automated alerts flag unusual activity, reducing response time to risks.

- Audit and Documentation Practices

Firms maintain detailed logs, model cards, and transparency reports. This not only helps meet regulatory requirements but also makes it easier to prove accountability during audits.

Regular employee training ensures that governance is not limited to IT or legal teams. Staff across departments are learning how to spot risks, follow protocols, and escalate concerns.

Not every organization has the in-house expertise to build secure and compliant systems. That’s why many enterprises choose to partner with AI development services companies to design governance frameworks, deploy monitoring tools, and manage compliance at scale.

Common Challenges in AI Security and Governance Compliance

Even with policies in place, enterprises often face obstacles that slow down the adoption of secure and responsible practices. The most common challenges include:

- Complex Rules Across Borders: Multinational companies must follow different privacy and data protection laws in each jurisdiction. Overlapping demands from European laws, healthcare regulations, and new risk-based directives often create uncertainty.

- Bias in Decision-Making: Smart systems may deliver unfair outcomes, making generative AI governance essential to enforce fairness standards.

- Unauthorized Tool Usage: Development, IT, or business teams sometimes use platforms without official authorization, leading to security gaps and potential compliance risks.

- Limited Resources: Smaller organizations struggle with budgets and staffing, making it challenging to maintain mature governance and security programs.

- Evolving Threats: Attackers continually develop new methods to exploit LLMs, forcing organizations to upgrade their defenses more rapidly.

These challenges make AI regulatory compliance harder to sustain, especially for enterprises operating across the globe. Enterprises that prepare for them can design stronger AI governance strategies and more resilient security programs.

Advanced AI Security Controls and Monitoring Practices

Basic precautions keep systems stable, but advanced controls make them resilient against new risks. Enterprises can strengthen their programs by:

1. Real-Time Tracking and Monitoring

Track models continuously rather than waiting for periodic reviews. Dashboards can flag drift in accuracy, unfair outcomes for certain groups, or spikes in error rates. Teams can then retrain models or adjust inputs before the system breaches any compliance requirement.
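As a rough illustration of continuous tracking, the sketch below keeps a rolling window of recent predictions and reports when accuracy falls under a floor. The class name, window size, and accuracy floor are hypothetical choices, and the approach assumes ground-truth labels arrive soon enough to compare against.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions."""

    def __init__(self, window=100, min_accuracy=0.9):
        # deque with maxlen drops the oldest outcome automatically
        self.outcomes = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None  # nothing recorded yet
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self):
        current = self.accuracy()
        return current is not None and current < self.min_accuracy


monitor = AccuracyMonitor(window=5, min_accuracy=0.8)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (1, 0), (0, 0)]:
    monitor.record(predicted, actual)
drift_flag = monitor.drifted()  # 3 of 5 correct is below the 0.8 floor
```

The same pattern can be repeated per customer segment to watch for unfair outcomes in specific groups rather than only overall accuracy.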

2. Automated Anomaly Detection and Alerting Systems

Security tools can scan system behavior in real time and raise alerts when patterns deviate from the norm.

For example, a fraud detection model that suddenly produces far more “approved” outcomes than usual can trigger a warning for investigation. Automated alerts cut response time and help prevent misuse.
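The fraud-model example can be sketched with a basic z-score check on the daily approval rate. The baseline figures, the function name, and the threshold of three standard deviations are assumptions; production tools typically use more robust statistics and account for seasonality.

```python
from statistics import mean, stdev


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` when it sits more than `threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    spread = stdev(history)
    if spread == 0:
        return current != history[0]
    return abs(current - mean(history)) / spread > threshold


# Hypothetical daily approval rates for a fraud detection model.
baseline = [0.71, 0.69, 0.70, 0.72, 0.68, 0.70, 0.71]
alert_today = is_anomalous(baseline, 0.93)   # sudden jump in approvals
alert_normal = is_anomalous(baseline, 0.70)  # ordinary day
```

In practice the flag would feed an alerting channel so that someone investigates before the behavior affects customers.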

3. Maintaining Audit Trails and Compliance Documentation

A clear record of every system change, access event, and decision is essential. Detailed audit logs enable organizations to demonstrate accountability, track outcomes, and resolve disputes. Storing documentation such as model cards, testing reports, and approval notes also simplifies regulatory reviews.
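One common way to make an audit trail tamper-evident is to chain entries by hash, so that changing an old record invalidates everything after it. The sketch below is a simplified illustration under that assumption; the event fields and function names are hypothetical, and a real system would also need secure storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(previous_hash, event):
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((previous_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append `event` (a dict) with a hash that also covers the previous entry."""
    previous_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "event": event,
        "prev": previous_hash,
        "hash": _digest(previous_hash, event),
    })


def verify(log):
    """Recompute every hash; return False if any entry was altered."""
    previous_hash = GENESIS
    for entry in log:
        expected = _digest(previous_hash, entry["event"])
        if entry["prev"] != previous_hash or entry["hash"] != expected:
            return False
        previous_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"actor": "alice", "action": "approve_model", "model": "fraud-v2"})
append_entry(audit_log, {"actor": "bob", "action": "deploy", "model": "fraud-v2"})
intact = verify(audit_log)

audit_log[0]["event"]["actor"] = "mallory"  # simulate tampering with history
tampered_detected = not verify(audit_log)
```

Because each hash depends on the one before it, an auditor only needs the latest hash to confirm that earlier records have not been rewritten.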

4. Integration with Enterprise Risk Management Systems

AI oversight is most effective when it integrates with the broader enterprise risk management program. Linking governance metrics with company-wide dashboards helps executives see technology risks alongside financial, operational, and compliance risks. This integration ensures that leadership treats AI risks with the same priority as other core business risks.

Sector-Specific Compliance Considerations

Organizations across various industries already implement governance practices in their day-to-day operations. A few examples include:

- Fintech: Global banks use AI security committees to review fraud detection models. They verify the absence of bias in decision-making and ensure compliance with financial regulations.

- Healthcare: Hospitals apply GDPR and HIPAA together to secure patient data. They review how diagnostic tools handle sensitive records before allowing them to be deployed.

- Technology Firms: Large tech companies set up internal review boards. These groups test products for ethical risks and approve only when systems meet fairness and transparency standards.

- E-commerce: Retail and marketing teams track how recommendation engines use customer data to suggest products. The AI governance team sets rules so that this data use complies with privacy laws such as GDPR or CCPA.

- Education: Universities use governance rules when adopting automated grading tools. Committees monitor whether results remain fair and unbiased for all students.

- Government Services: Public agencies deploy governance checks on automated decision-making systems. They follow guidelines such as Canada’s Directive on Automated Decision-Making to keep processes accountable.

- Manufacturing: Companies use ISO/IEC standards to ensure that their predictive maintenance systems (which predict when equipment might fail) are safe, reliable, and compliant with industry regulations. These standards help avoid breakdowns and keep operations running smoothly.

These examples show that governance works best when companies embed it into business processes rather than treat it as a one-time requirement.

Global Regulations Shaping AI Governance

Across the world, regulators are establishing rules to ensure intelligent systems are safe, fair, and accountable. While approaches differ by region, these laws and guidelines together define how organizations achieve responsible AI governance and compliance.

OECD Guidelines for Trustworthy AI

The OECD AI Principles, released in 2019, remain one of the most influential frameworks for governance. They focus on:

- Fairness and non-discrimination

- Transparency in decision-making

- Accountability for outcomes

- Human-centric oversight

- Robustness and security

In 2025, these principles are far from outdated. More than 40 countries have adopted them as a foundation for national AI policies, and the G20 endorsed its own AI principles drawn from them in 2019. Even corporate governance programs often borrow directly from this framework, showing how early standards continue to shape today’s compliance strategies.

United Kingdom: Pro-Innovation AI Regulation Approach

The UK has opted for a sector-led model instead of one central law. Regulators in finance, healthcare, and transport apply their own rules, guided by common principles of safety, transparency, and accountability. In 2025, the government has doubled down on this flexible approach, encouraging innovation sandboxes while still requiring responsible oversight across industries.

The EU AI Act and Voluntary Code

The EU AI Act is the first comprehensive law to regulate AI across an entire region. It classifies systems by risk, requiring stricter obligations for high-risk applications, such as healthcare, employment, and financial services.

In 2025, the EU also published a voluntary Code of Practice for general-purpose AI models to help providers prepare for the obligations that began to apply in August 2025. This shows how Europe not only writes rules but also supports organizations in achieving AI compliance before penalties take effect.

Canada: Directive on Automated Decision-Making

Canada’s Directive on Automated Decision-Making focuses on how public agencies use algorithms. It requires officials to complete an Algorithmic Impact Assessment (AIA) before deploying systems, test for bias, and ensure human review in sensitive decisions. In 2025, Canada continues to refine this directive, with agencies publishing transparency reports that other governments now use as templates for their own governance frameworks.

The United States: SR-11-7 and State-Led Rules

In the U.S., governance has developed in layers. The Federal Reserve’s SR-11-7 model risk guidance, originally for banks, now informs how enterprises manage machine learning models more broadly—covering validation, monitoring, and documentation.

By mid-2025, a federal AI strategy shifted power back to states, allowing them to keep experimenting with their own laws. States like California and New York have advanced rules around chatbots, bias audits, and algorithmic transparency. This mix of federal guidance and state-level rules makes the U.S. governance approach fragmented but highly active.

Europe’s Expanding AI Regulation

Beyond the EU AI Act, individual countries are introducing their own oversight measures. France and Germany are drafting stricter transparency obligations, while Spain has launched an agency to monitor algorithms in public administration. Combined with the Council of Europe’s 2024 Treaty on AI and Human Rights, Europe now leads globally in binding, multi-layered governance.

AI Governance in the Asia-Pacific Region

APAC countries have taken diverse approaches:

- Singapore publishes a voluntary Model AI Governance Framework focused on explainability.

- Japan prioritizes public trust and international cooperation.

- Australia follows voluntary ethical principles while exploring stricter laws.

- India combines the DPDP Act, Responsible AI guidelines, and a new AI Safety Institute (2025) to balance rapid adoption with emerging safeguards.

- In 2025, China proposed a UN-led global governance body to coordinate international standards and set the direction for generative AI governance.

This variety reflects regional priorities, but all approaches aim to combine rapid innovation with responsible safeguards.

Preparing for the Future: Emerging Trends in AI Governance and Compliance

AI governance is not static—rules, risks, and expectations will keep changing. Organizations that look ahead can adapt faster and avoid costly adjustments later. Key trends shaping the next phase include:

- Global Convergence of Rules: Regional laws, such as the EU AI Act and Canada’s directive, as well as models in the Asia-Pacific region, will increasingly align, creating more consistent global standards. Enterprises must prepare to comply across borders.

- Governance for Generative AI: Tools that generate text, images, and code introduce new risks related to bias, copyright, and misinformation. Regulators are now drafting rules focused on generative AI governance, ensuring these systems remain transparent and accountable.

- Scalable Frameworks: Static policies will not be enough. Companies will need adaptable governance frameworks that update quickly as technology, threats, or laws change, making AI implementation more flexible and resilient.

- Integration with ESG Goals: Investors and customers now expect responsible AI practices as part of environmental, social, and governance (ESG) commitments. Governance will expand beyond compliance to brand reputation and stakeholder trust.

- Stronger Role of Independent Oversight: Independent audits, certifications, and safety testing institutes will become more common. This shift will make compliance visible and verifiable, not just internal, ensuring transparency and accountability.

Forward-looking organizations are already piloting these practices, treating governance as a competitive advantage rather than a regulatory burden. Those who invest now will find it easier to navigate future laws and build lasting trust.

Build Responsible AI Frameworks with Quokka Labs

Compliance means securing AI systems against threats and proving to regulators and customers that those systems are used responsibly. Policies and controls must work hand in hand to keep adoption safe, transparent, and future-ready.

Quokka Labs, an AI development service company, helps organizations translate these goals into practice. From drafting security and governance policies to embedding monitoring and compliance into the development lifecycle, our team ensures businesses can innovate confidently without falling behind regulatory expectations.
